AI tools for open-source testing agents

Related Tools:

Keploy

Keploy is an open-source, AI-powered API, integration, and unit testing agent for developers. It offers a unified testing platform that uses AI to write and validate tests, aiming to maximize coverage while minimizing effort. Its features include automated test generation, record-and-replay for integration tests, and API test automation. The platform also provides GitHub PR unit test agents, centralized reporting dashboards, and smarter test deduplication.
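The record-and-replay idea can be illustrated with a minimal, standard-library Python sketch. It is a toy built on assumptions, not Keploy's API or mechanism (Keploy captures real API traffic rather than wrapping functions): the `Recorder` class, its `record`/`replay` methods, and the `get_price` dependency are all hypothetical. A real dependency's responses are captured once, then served back so the same test runs deterministically without the live service.

```python
import json
from typing import Any, Callable, Dict, List


class Recorder:
    """Hypothetical record-and-replay helper (illustrative only, not Keploy's API)."""

    def __init__(self) -> None:
        # Maps a serialized argument tuple to the response captured for it.
        self.cassette: Dict[str, Any] = {}

    @staticmethod
    def _key(args: tuple) -> str:
        return json.dumps(args, sort_keys=True)

    def record(self, func: Callable[..., Any]) -> Callable[..., Any]:
        """Wrap the real dependency and store every response it returns."""
        def wrapper(*args: Any) -> Any:
            result = func(*args)
            self.cassette[self._key(args)] = result
            return result
        return wrapper

    def replay(self) -> Callable[..., Any]:
        """Return a stub that serves stored responses and fails on unrecorded calls."""
        def stub(*args: Any) -> Any:
            key = self._key(args)
            if key not in self.cassette:
                raise KeyError(f"no recorded response for {key}")
            return self.cassette[key]
        return stub


def get_price(sku: str) -> dict:
    # Hypothetical stand-in for a slow or flaky external service.
    return {"sku": sku, "price": 42}


def checkout_total(fetch: Callable[[str], dict], skus: List[str]) -> int:
    # Code under test: sums the prices returned by whichever fetcher it is given.
    return sum(fetch(sku)["price"] for sku in skus)


rec = Recorder()
live_total = checkout_total(rec.record(get_price), ["a", "b"])  # record phase
replayed_total = checkout_total(rec.replay(), ["a", "b"])       # replay phase
print(live_total, replayed_total)  # 84 84
```

Failing loudly on an unrecorded call is a deliberate choice: a replayed test should surface new, unexpected dependency traffic rather than silently passing.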

Lunary

Lunary is an AI developer platform designed to bring AI applications to production. It offers a comprehensive set of tools to manage, improve, and protect LLM apps. With features like Logs, Metrics, Prompts, Evaluations, and Threads, Lunary empowers users to monitor and optimize their AI agents effectively. The platform supports tasks such as tracing errors, labeling data for fine-tuning, optimizing costs, running benchmarks, and testing open-source models. Lunary also facilitates collaboration with non-technical teammates through features like A/B testing, versioning, and clean source-code management.

RagaAI Catalyst

RagaAI Catalyst is an AI observability, monitoring, and evaluation platform that helps users observe, evaluate, and debug AI agents at every stage of agentic AI workflows. Its features include trace-data visualization and comprehensive trace logging, instrumentation and monitoring of tools and agents, AI performance enhancement, agentic testing with evaluation of each agent step, enterprise-grade experiment management, secure and reliable LLM outputs, fine-tuning with integrated human feedback, custom evaluation logic, synthetic data generation, and fast, precise LLM testing. The platform is described as trusted by AI leaders globally and provides a comprehensive suite of tools for AI developers and enterprises.

LangChain

LangChain is a framework for developing applications powered by large language models (LLMs). It simplifies every stage of the LLM application lifecycle, including development, productionization, and deployment. LangChain consists of open-source libraries such as langchain-core, langchain-community, and partner packages. It also includes LangGraph for building stateful agents and LangSmith for debugging and monitoring LLM applications.

Infrabase.ai

Infrabase.ai is a directory of AI infrastructure products that helps users discover and explore a wide range of tools for building world-class AI products. The platform offers a comprehensive directory of products in categories such as Vector databases, Prompt engineering, Observability & Analytics, Inference APIs, Frameworks & Stacks, Fine-tuning, Audio, and Agents. Users can find tools for tasks like data storage, model development, performance monitoring, and more, making it a valuable resource for AI projects.

Robin

Robin by Mobile.dev is an AI-powered mobile app testing tool that allows users to test their mobile apps with confidence. It offers a simple yet powerful open-source framework called Maestro for testing mobile apps at high speed. With intuitive and reliable testing powered by AI, users can write rock-solid tests without extensive coding knowledge. Robin provides an end-to-end testing strategy, rapid testing across various devices and operating systems, and auto-healing of test flows using state-of-the-art AI models.

Haystack

Haystack is a production-ready, open-source AI framework for building AI applications. Its flexible architecture of components and pipelines lets users customize applications to their specific requirements. Through partnerships with leading LLM providers and AI tools, Haystack gives users freedom of choice. The framework is built for production, with fully serializable pipelines, logging and monitoring integrations, and deployment guides for full-scale deployments on various platforms. Users can also build Haystack apps faster with deepset Studio, a platform for drag-and-drop pipeline construction, testing, debugging, and prototype sharing.
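To make the components-and-pipelines idea concrete, here is a toy sketch in plain Python. It is not Haystack's API; the `ToyPipeline`, `Lowercaser`, and `KeywordRetriever` names are hypothetical. It assumes each component exposes a `run` method returning a dict whose keys feed the next component's keyword arguments, and it serializes the pipeline's structure (component types and init parameters) to JSON, loosely mirroring what a serializable pipeline means.

```python
import json
from typing import Any, Dict, List


class Lowercaser:
    """Toy component: normalizes the query text."""

    init_params: Dict[str, Any] = {}

    def run(self, text: str) -> Dict[str, Any]:
        return {"text": text.lower()}


class KeywordRetriever:
    """Toy component: returns documents that share at least one word with the query."""

    def __init__(self, documents: List[str]) -> None:
        self.documents = documents
        self.init_params = {"documents": documents}

    def run(self, text: str) -> Dict[str, Any]:
        words = set(text.split())
        hits = [d for d in self.documents if words & set(d.lower().split())]
        return {"documents": hits}


class ToyPipeline:
    """Hypothetical linear pipeline: each component's output dict becomes the next one's kwargs."""

    def __init__(self) -> None:
        self.steps: List[Any] = []

    def add(self, component: Any) -> "ToyPipeline":
        self.steps.append(component)
        return self

    def run(self, **inputs: Any) -> Dict[str, Any]:
        data: Dict[str, Any] = inputs
        for step in self.steps:
            data = step.run(**data)
        return data

    def to_json(self) -> str:
        # Serialize structure (component types and init parameters), not runtime state.
        return json.dumps(
            [{"type": type(s).__name__, "params": s.init_params} for s in self.steps]
        )


docs = ["Haystack builds pipelines", "Bananas are yellow"]
pipe = ToyPipeline().add(Lowercaser()).add(KeywordRetriever(docs))
print(pipe.run(text="Haystack PIPELINES"))  # {'documents': ['Haystack builds pipelines']}
print(pipe.to_json())
```

Keeping components independent and wiring them only through named inputs and outputs is what makes such a pipeline easy to reconfigure and to serialize.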

ARC Prize

ARC Prize is a platform hosting a $1,000,000+ public competition to beat the ARC-AGI benchmark and open-source the solution. The platform is dedicated to advancing open artificial general intelligence (AGI) for the public benefit. Its formal benchmark, ARC-AGI, created by François Chollet, measures progress toward AGI by testing the ability to efficiently acquire new skills and solve open-ended problems. ARC Prize also invites visitors to try solving the test puzzles themselves to identify the underlying patterns and get a feel for what the benchmark demands.
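ARC-AGI tasks are published as JSON objects with `train` and `test` lists of input/output grid pairs, where each grid is a list of rows of integers 0-9. The sketch below, a deliberately naive brute-force search over two hand-written transformations, shows the few-shot setup: find a rule consistent with every training pair, then apply it to the test input. The grids and the candidate rules are invented for illustration; competitive solvers search far richer program spaces.

```python
from typing import Callable, Dict, List

Grid = List[List[int]]

# A toy task in the ARC JSON layout. These grids are invented, not taken from the benchmark.
task: Dict[str, list] = {
    "train": [
        {"input": [[1, 0], [2, 3]], "output": [[0, 1], [3, 2]]},
        {"input": [[5, 5, 0]], "output": [[0, 5, 5]]},
    ],
    "test": [{"input": [[7, 8, 9]]}],
}


def flip_horizontal(grid: Grid) -> Grid:
    return [list(reversed(row)) for row in grid]


def transpose(grid: Grid) -> Grid:
    return [list(col) for col in zip(*grid)]


# Hypothetical, tiny "DSL" of candidate rules to try in order.
CANDIDATES: Dict[str, Callable[[Grid], Grid]] = {
    "transpose": transpose,
    "flip_horizontal": flip_horizontal,
}


def solve(task: Dict[str, list]) -> Dict[str, object]:
    """Return the first rule consistent with all training pairs and its test predictions."""
    for name, fn in CANDIDATES.items():
        if all(fn(pair["input"]) == pair["output"] for pair in task["train"]):
            return {"rule": name, "predictions": [fn(t["input"]) for t in task["test"]]}
    return {"rule": None, "predictions": []}


print(solve(task))  # {'rule': 'flip_horizontal', 'predictions': [[[9, 8, 7]]]}
```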

Rupert AI

Rupert AI is an all-in-one AI platform that allows users to train custom AI models for text, audio, video, and images. The platform streamlines AI workflows by providing access to the latest open-source AI models and tools in a single studio tailored to business needs. Users can automate their AI workflow, generate high-quality AI product photography, and utilize popular AI workflows like the AI Fashion Model Generator and Facebook Ad Testing Tool. Rupert AI aims to revolutionize the way businesses leverage AI technology to enhance marketing visuals, streamline operations, and make informed decisions.

Comfy Org

Comfy Org is an open-source AI tooling platform dedicated to advancing and democratizing AI technology. The platform offers tools like node manager, node registry, CLI, automated testing, and public documentation to support the ComfyUI ecosystem. Comfy Org aims to make state-of-the-art AI models accessible to a wider audience by fostering an open-source and community-driven approach. The team behind Comfy Org consists of individuals passionate about developing and maintaining various components of the platform, ensuring a reliable and secure environment for users to explore and contribute to AI tooling.

Langtrace AI

Langtrace AI is an open-source observability tool from Scale3 Labs that helps monitor, evaluate, and improve LLM (large language model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, and the platform is SOC 2 Type II certified. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability so teams can build and deploy AI applications with confidence.

Rainforest QA

Rainforest QA is an AI-powered test automation platform designed for SaaS startups to streamline and accelerate their testing processes. It offers AI-accelerated testing, no-code test automation, and expert QA services to help teams achieve reliable test coverage and faster release cycles. Rainforest QA's platform integrates with popular tools, provides detailed insights for easy debugging, and ensures visual-first testing for a seamless user experience. With a focus on automating end-to-end tests, Rainforest QA aims to eliminate QA bottlenecks and help teams ship bug-free code with confidence.

LanguageGUI

LanguageGUI is an open-source design system and UI kit that lets LLMs format their text output as richer graphical user interfaces. It includes dozens of unique UI elements for rich conversational interfaces: 100+ UI components and customizable screens, 10+ conversational UI widgets, 20+ chat bubbles, 30+ pre-built screens to kick off your design, 5+ chat sidebars with customizable settings, multi-prompt workflow screen designs, 8+ prompt boxes, and dark mode. LanguageGUI is built with variables, styles, and Figma Auto Layout, and is free to use for both personal and commercial projects without required attribution.

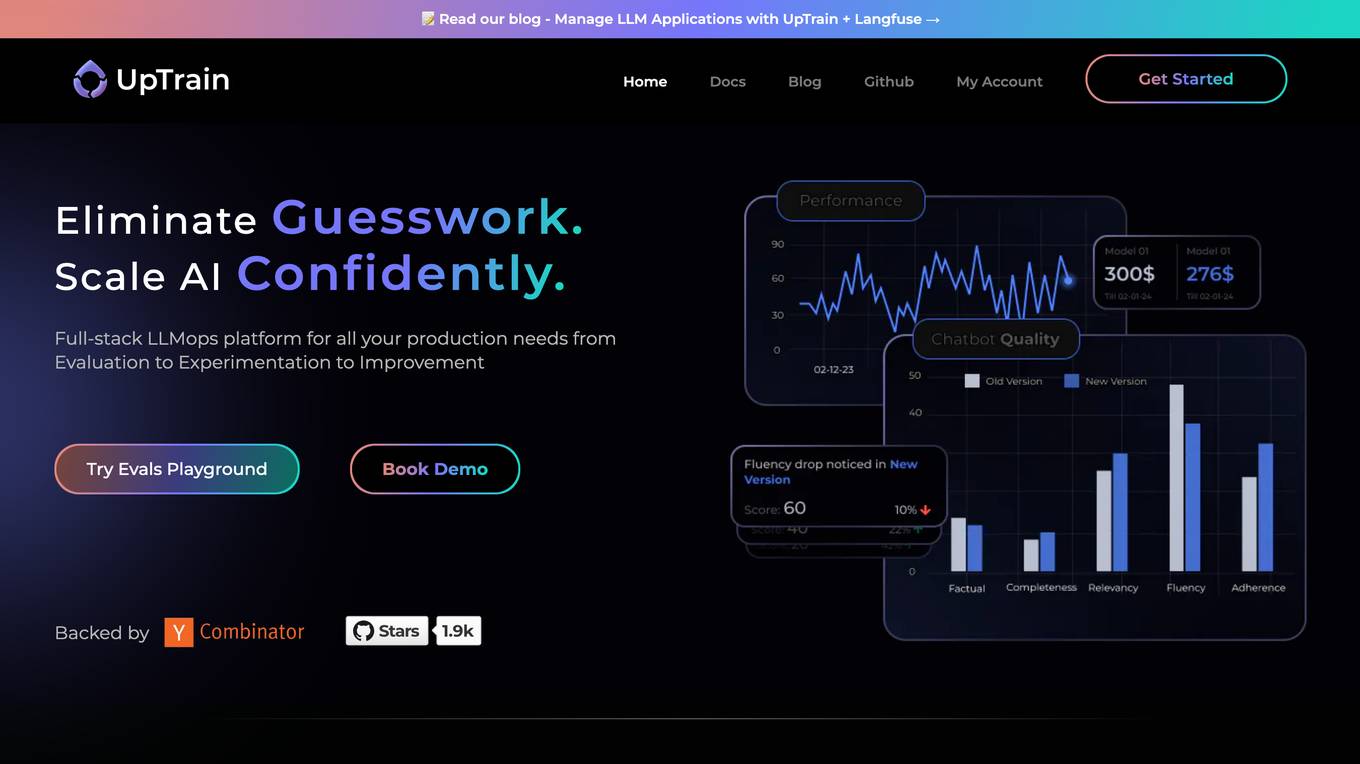

UpTrain

UpTrain is a full-stack LLMOps platform that helps users scale AI with confidence, covering production needs from evaluation to experimentation to improvement. It offers diverse evaluations, automated regression testing, enriched datasets, and novel techniques for generating high-quality scores. UpTrain is built for developers, compliant with data governance requirements, cost-efficient, reliable, and open-source. It provides precise metrics, task understanding, and safeguard systems, and covers a wide range of language features and quality aspects. The platform suits developers, product managers, and business leaders looking to improve their LLM applications.

GptSdk

GptSdk is an AI tool that simplifies adding AI capabilities to PHP projects. It offers dynamic prompt management, model management, bulk testing, collaboration, chaining, integrations, and more. The project claims it lets developers build professional AI applications 10x faster; it integrates with Laravel and Symfony and supports both local and API prompts. GptSdk is open-source under the MIT License and offers a flexible pricing model with a generous free tier.

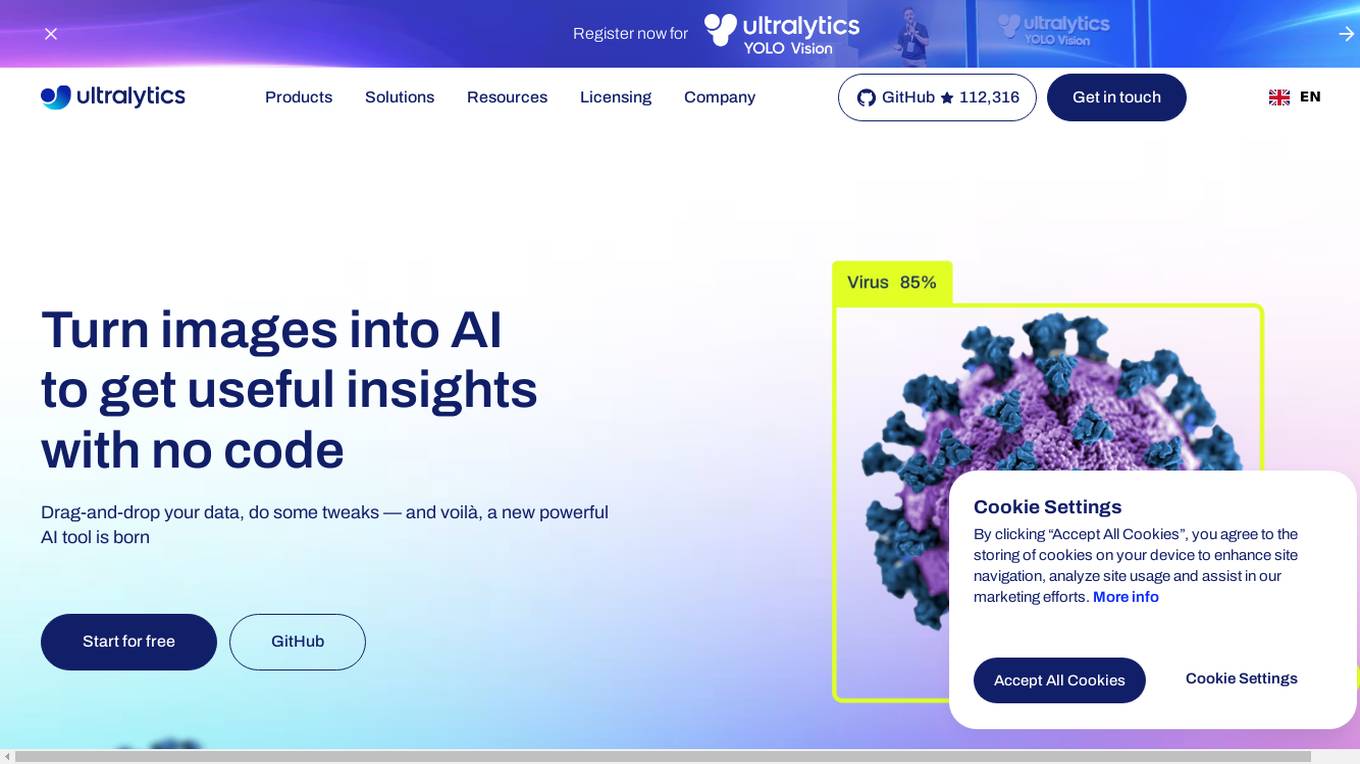

Ultralytics

Ultralytics provides Vision AI tools that let users turn images into trained AI models and extract useful insights without writing any code. A drag-and-drop interface covers data input, model training, and deployment, making it accessible to startups, enterprises, data scientists, ML engineers, hobbyists, researchers, and academics. Ultralytics YOLO, the flagship tool, lets users train machine learning models in seconds, choose from pre-built models, test models on mobile devices, and deploy custom models in various formats. The tooling is powered by the open-source Ultralytics Python package and focuses on computer vision tasks such as object detection and image classification.

Confident AI

Confident AI is open-source evaluation infrastructure for large language models (LLMs). It provides a centralized platform for judging LLM applications, helping teams identify and address weaknesses in their LLM implementations. With Confident AI, companies can define ground truths to check that their LLM behaves as expected, evaluate performance against expected outputs to pinpoint where to iterate, and use advanced diff tracking to guide them toward the best LLM stack. The platform offers comprehensive analytics for identifying areas of focus, along with A/B testing, evaluation, output classification, a reporting dashboard, dataset generation, and detailed monitoring to help productionize LLMs with confidence.
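Evaluating against ground truths can be sketched with a simple lexical metric. This is not Confident AI's API: the `EvalCase` structure, the token-overlap F1 metric (a SQuAD-style stand-in for the model-based metrics LLM evaluation platforms typically offer), and the 0.5 pass threshold are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    # Hypothetical record: the prompt, its ground-truth answer, and the app's actual answer.
    prompt: str
    expected_output: str
    actual_output: str


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a prediction and its reference (case-insensitive)."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def evaluate(cases: List[EvalCase], metric: Callable[[str, str], float],
             threshold: float = 0.5) -> List[bool]:
    """Score each case against its ground truth and report pass/fail."""
    return [metric(c.actual_output, c.expected_output) >= threshold for c in cases]


cases = [
    EvalCase("Capital of France?", "Paris is the capital of France",
             "The capital of France is Paris"),
    EvalCase("2 + 2?", "4", "five"),
]
print(evaluate(cases, token_f1))  # [True, False]
```

A lexical metric like this is cheap and deterministic but misses paraphrases, which is why evaluation tools usually add semantic or LLM-judged metrics on top.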

Open Source Starter Guide

Open Source Guide for Everyone: first-time contributors, maintainers, and the curious.

Open Source Alternative

Find an open-source alternative to any paid service you can think of.

GPT-Info

Extensive guide for ChatGPT models. 🛈 This software is free and open-source; anyone can redistribute it and/or modify it.

AI News Generator

Generates accurate, timely news articles from open-source government data.

EE-GPT

A search engine and troubleshooter for electrical engineers to promote an open-source community. Submit your questions, corrections and feedback to [email protected]

ChadGPT

Dr. Tiffany Love's open source AI boyfriend trained on my Ex's training data he collected during our relationship and filtered to be less of a, well you know

GPT Creation Guide

GPT insights and explanations. 🛈 This software is free and open-source; anyone can redistribute it and/or modify it.

Academic Research Reviewer

Upon uploading a research paper, I provide a concise section-wise analysis covering the Abstract, Literature Review, Findings, Methodology, and Conclusion. I also critique the work, highlight its strengths, and answer any open questions from my knowledge base of open-source materials.

Nothotdog

NotHotDog is an open-source testing framework for evaluating and validating voice and text-based AI agents. It offers a user-friendly interface for creating, managing, and executing tests against AI models. The framework supports WebSocket and REST APIs, test case management, and automated evaluation of responses, providing a seamless experience for test creation and execution.

testzeus-hercules

Hercules is billed as the world's first open-source testing agent built to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, aiming to make testing simple, reliable, and efficient. Hercules adapts to platforms such as Salesforce and fits into CI/CD pipelines. Its goal is to democratize and disrupt test automation by making top-tier testing accessible to everyone; the project describes itself as transparent, reliable, and community-driven. Hercules can be installed from the PyPI package, run with Docker, or built and run from source. It supports various AI models, provides detailed installation and usage instructions, and integrates Nuclei for security testing along with WCAG-based accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.
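Before an agent can execute Gherkin steps, the scenario has to be split into individual Given/When/Then steps. The following is a hypothetical sketch of that first stage only, not Hercules's parser (Hercules hands steps to AI agents, which this does not attempt). `parse_steps` is an invented helper, and it treats `And`/`But` as continuing the preceding step's keyword, the usual Gherkin reading.

```python
from typing import List, Optional, Tuple

STEP_KEYWORDS = ("Given", "When", "Then", "And", "But")


def parse_steps(scenario_text: str) -> List[Tuple[str, str]]:
    """Split a Gherkin scenario into (keyword, step text) pairs.

    'And'/'But' steps take the keyword of the step before them.
    """
    steps: List[Tuple[str, str]] = []
    last: Optional[str] = None
    for raw in scenario_text.splitlines():
        keyword, _, rest = raw.strip().partition(" ")
        if keyword not in STEP_KEYWORDS:
            continue  # skip Feature/Scenario headers, comments, and blank lines
        if keyword in ("And", "But"):
            if last is None:
                raise ValueError(f"'{keyword}' step has no preceding step")
            keyword = last
        steps.append((keyword, rest))
        last = keyword
    return steps


scenario = """
Feature: Login
  Scenario: Valid user signs in
    Given I open "https://example.com/login"
    When I enter "alice" in the username field
    And I click the "Sign in" button
    Then I should see "Welcome, alice"
"""

for kind, text in parse_steps(scenario):
    print(kind, "->", text)
```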

awesome-MLSecOps

Awesome MLSecOps is a curated list of open-source tools, resources, and tutorials for MLSecOps (Machine Learning Security Operations). It includes a wide range of security tools and libraries for protecting machine learning models against adversarial attacks, as well as resources for AI security, data anonymization, model security, and more. The repository aims to provide a comprehensive collection of tools and information to help users secure their machine learning systems and infrastructure.

awesome-AI-driven-development

Awesome AI-Driven Development is a curated list of tools, frameworks, and resources for AI-driven development. It includes AI code editors, terminal-based coding agents, IDE plugins & extensions, multi-agent systems, code generation & templates, testing & quality assurance tools, Model Context Protocol implementations, pull request & code review tools, project management & documentation tools, language models for code, development workflows tools, code search & analysis tools, specialized tools for Git & version control, cloud & DevOps, language-specific tasks, terminal & shell utilities, prompt & context management tools, Copilot extensions & alternatives, learning & tutorials resources, and configuration & enhancement tools for AI coding assistants.

Academic_LLM_Sec_Papers

Academic_LLM_Sec_Papers is a curated collection of academic papers on security applications of LLMs. Papers are sorted by conference name and publication year and cover topics such as large language models for blockchain security, software engineering, machine learning, and more. Developers and researchers are welcome to contribute additional published papers to the list. The repository also provides information on the listed conferences and journals in security, networking, software engineering, and cryptography. The papers span a wide range of topics, including privacy risks, ethical concerns, vulnerabilities, threat modeling, code analysis, and fuzzing.

LLM4SE

The collection is actively updated with the help of an internal literature search engine.

giskard

Giskard is an open-source Python library that automatically detects performance, bias & security issues in AI applications. The library covers LLM-based applications such as RAG agents, all the way to traditional ML models for tabular data.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers a library of built-in checkers, an expressive policy language, data flow analysis, real-time monitoring, and extensible architecture for custom checkers. It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches during runtime. The analyzer leverages deep contextual understanding and a purpose-built rule matching engine for security policy enforcement.
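One of the issues mentioned, looping behavior, can be checked with a small trace scan. This is a hypothetical sketch rather than Invariant's policy language or API: it assumes a trace is a list of dict events with `role`, `name`, and `args` keys, and it flags any run in which the same tool is called with identical arguments more than `max_repeats` times in a row (non-tool events between those calls are ignored).

```python
from typing import Any, Dict, List


def find_loops(trace: List[Dict[str, Any]], max_repeats: int = 3) -> List[Dict[str, Any]]:
    """Flag runs where one tool is called with identical args more than max_repeats times in a row.

    Only tool-call events are compared; other events between them are ignored.
    """
    calls = [(i, e) for i, e in enumerate(trace) if e.get("role") == "tool_call"]
    findings: List[Dict[str, Any]] = []
    run_start = 0
    for j in range(1, len(calls) + 1):
        same_call = (
            j < len(calls)
            and calls[j][1]["name"] == calls[run_start][1]["name"]
            and calls[j][1]["args"] == calls[run_start][1]["args"]
        )
        if not same_call:
            run_length = j - run_start
            if run_length > max_repeats:
                findings.append({
                    "tool": calls[run_start][1]["name"],
                    "repeats": run_length,
                    "first_index": calls[run_start][0],
                })
            run_start = j
    return findings


# Hypothetical trace: the agent retries the same search five times without progress.
trace = [{"role": "user", "content": "What's the weather in Paris?"}]
trace += [{"role": "tool_call", "name": "search", "args": {"q": "weather Paris"}} for _ in range(5)]
trace += [{"role": "assistant", "content": "Sorry, I could not find it."}]

print(find_loops(trace))  # [{'tool': 'search', 'repeats': 5, 'first_index': 1}]
```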

langfuse

Langfuse is a tool that helps you develop, monitor, and test your LLM applications. With Langfuse, you can:

* **Develop:** Instrument your app and start ingesting traces into Langfuse, inspect and debug complex logs, and manage, version, and deploy prompts from within Langfuse.
* **Monitor:** Track metrics (cost, latency, quality) and gain insights from dashboards and data exports, collect and calculate scores for your LLM completions, run model-based evaluations, collect user feedback, and manually score observations in Langfuse.
* **Test:** Track and test app behaviour before deploying a new version, test expected input/output pairs and benchmark performance before deploying, and track versions and releases in your application.

Langfuse is easy to get started with and offers a generous free tier. You can sign up for Langfuse Cloud or deploy Langfuse locally or on your own infrastructure. It also offers a variety of integrations for connecting to your LLM applications.
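Instrumenting an app to collect traces usually means wrapping functions so that each call records its inputs, output, and latency. The sketch below shows that pattern with a decorator and an in-memory list; it is not the Langfuse SDK, and the `traced` decorator and `TRACE` store are hypothetical stand-ins for a real client that would send these records to a backend.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# In-memory stand-in for a tracing backend (hypothetical).
TRACE: List[Dict[str, Any]] = []


def traced(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record the name, inputs, output, and latency of each call (not the Langfuse SDK)."""
    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        output = func(*args, **kwargs)  # exceptions propagate without a record in this sketch
        TRACE.append({
            "name": func.__name__,
            "input": {"args": args, "kwargs": kwargs},
            "output": output,
            "latency_s": time.perf_counter() - start,
        })
        return output
    return wrapper


@traced
def retrieve(query: str) -> List[str]:
    return [f"doc about {query}"]


@traced
def answer(query: str) -> str:
    docs = retrieve(query)
    return f"Answer based on {len(docs)} document(s)"


answer("pricing")
# Inner calls finish first, so they are recorded first.
print([span["name"] for span in TRACE])  # ['retrieve', 'answer']
```

Records like these are the raw material for the cost, latency, and quality dashboards described above; a real tracer would also link nested calls into a parent-child tree.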

awesome-production-llm

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

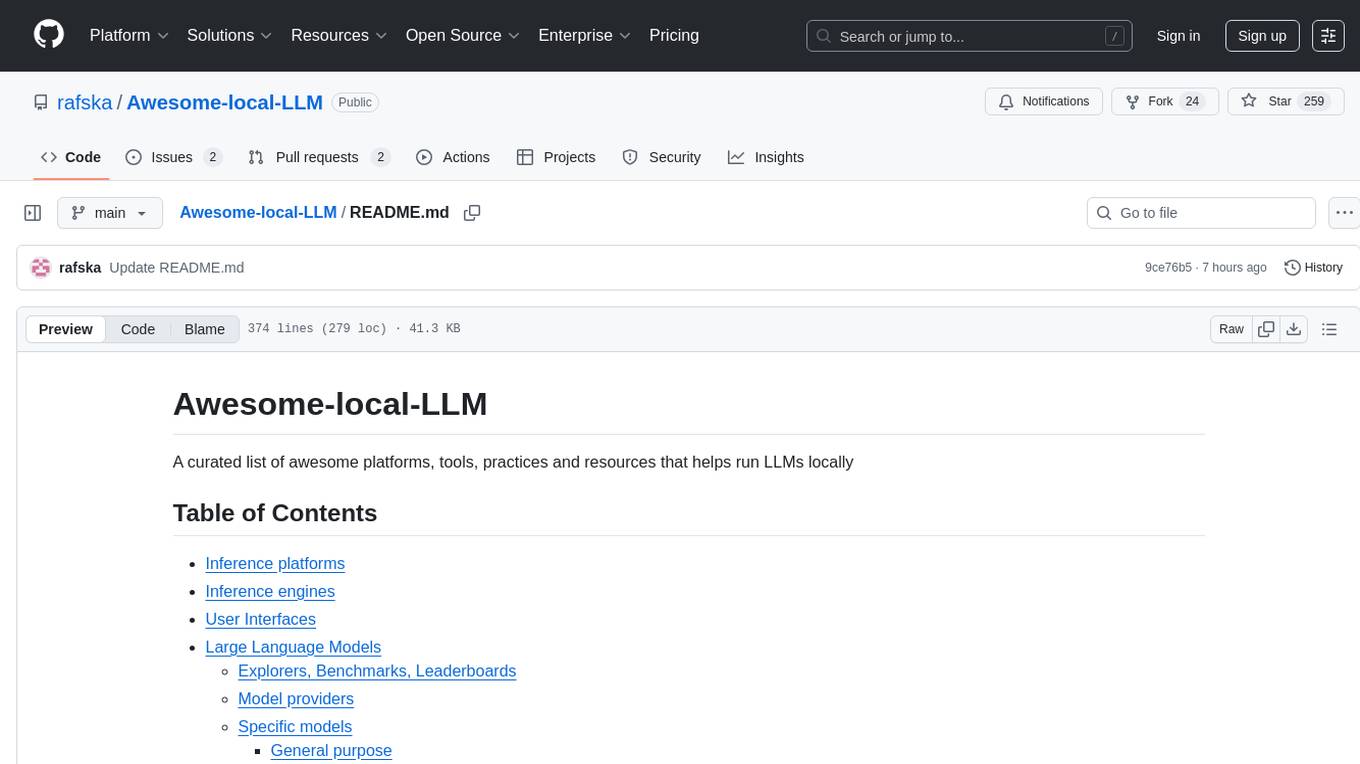

Awesome-local-LLM

Awesome-local-LLM is a curated list of platforms, tools, practices, and resources that help run Large Language Models (LLMs) locally. It includes sections on inference platforms, engines, user interfaces, specific models for general purpose, coding, vision, audio, and miscellaneous tasks. The repository also covers tools for coding agents, agent frameworks, retrieval-augmented generation, computer use, browser automation, memory management, testing, evaluation, research, training, and fine-tuning. Additionally, there are tutorials on models, prompt engineering, context engineering, inference, agents, retrieval-augmented generation, and miscellaneous topics, along with a section on communities for LLM enthusiasts.

llm_benchmarks

llm_benchmarks is a collection of benchmarks and datasets for evaluating Large Language Models (LLMs). It includes various tasks and datasets to assess LLMs' knowledge, reasoning, language understanding, and conversational abilities. The repository aims to provide comprehensive evaluation resources for LLMs across different domains and applications, such as education, healthcare, content moderation, coding, and conversational AI. Researchers and developers can leverage these benchmarks to test and improve the performance of LLMs in various real-world scenarios.

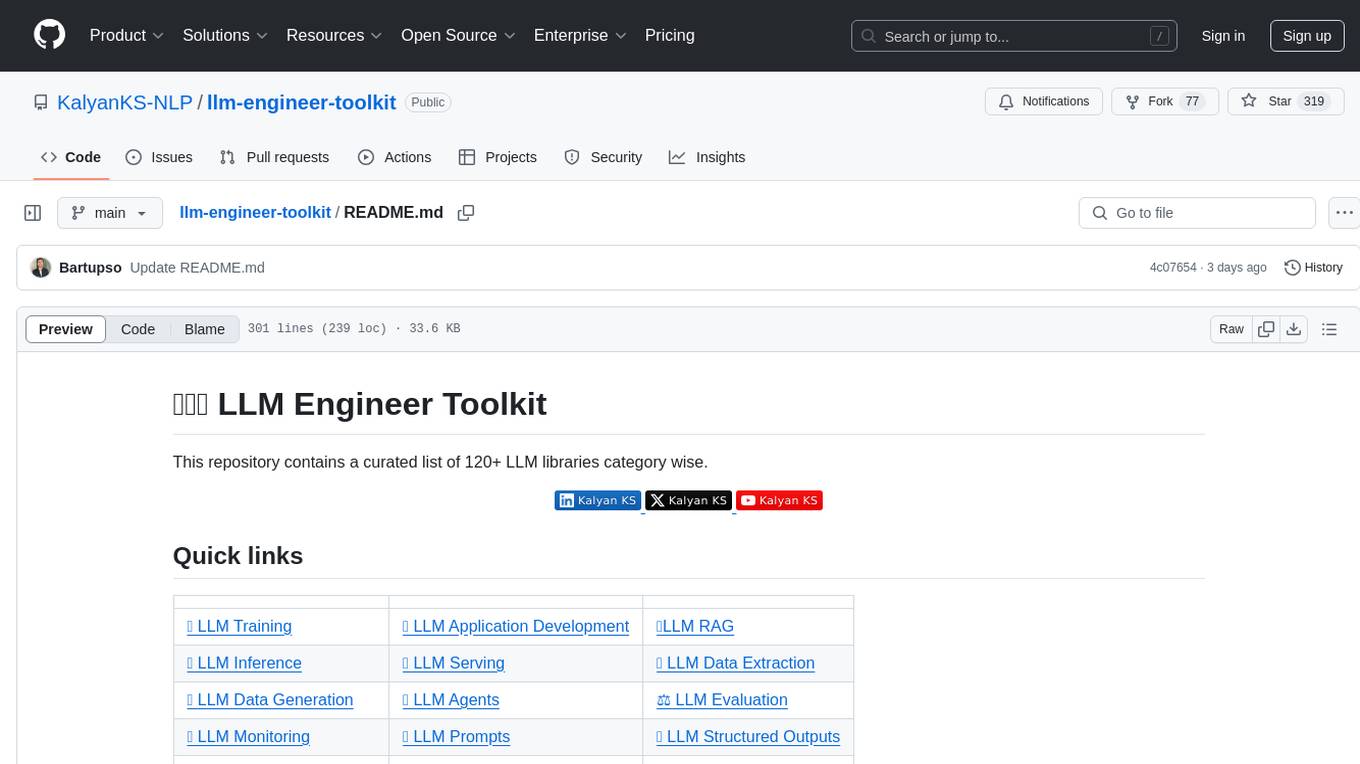

llm-engineer-toolkit

The LLM Engineer Toolkit is a curated repository of over 120 LLM libraries, categorized by task: training and fine-tuning, application development, inference and serving, data extraction, synthetic data generation, AI agents, evaluation, monitoring, prompt optimization, structured outputs, safety and security, embedding models, and other miscellaneous tools. Together, these libraries and frameworks help streamline the development, deployment, and optimization of large language models.

pentagi

PentAGI is an automated security testing tool built on AI technologies. It is designed for information security professionals, researchers, and enthusiasts who need a powerful, flexible solution for penetration testing. Its features include secure, isolated operation in a sandboxed Docker environment; a fully autonomous AI agent that carries out penetration-testing steps; a suite of 20+ professional security tools; a smart memory system for storing research results; web intelligence gathering; integration with external search systems; a team delegation system; comprehensive monitoring and reporting; a modern interface; API integration; persistent storage; a scalable architecture; a self-hosted deployment model; flexible authentication; and quick deployment through Docker Compose.

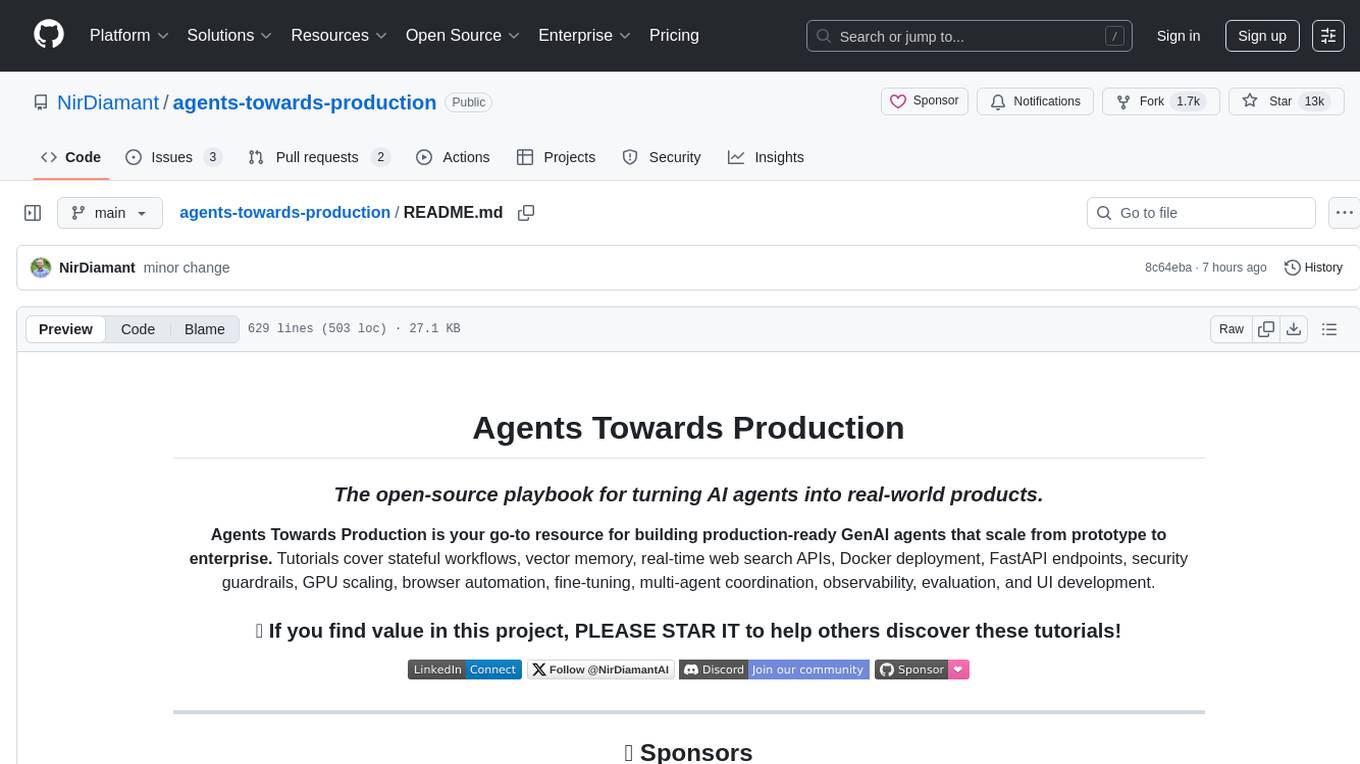

agents-towards-production

Agents Towards Production is an open-source playbook for building production-ready GenAI agents that scale from prototype to enterprise. Tutorials cover stateful workflows, vector memory, real-time web search APIs, Docker deployment, FastAPI endpoints, security guardrails, GPU scaling, browser automation, fine-tuning, multi-agent coordination, observability, evaluation, and UI development.

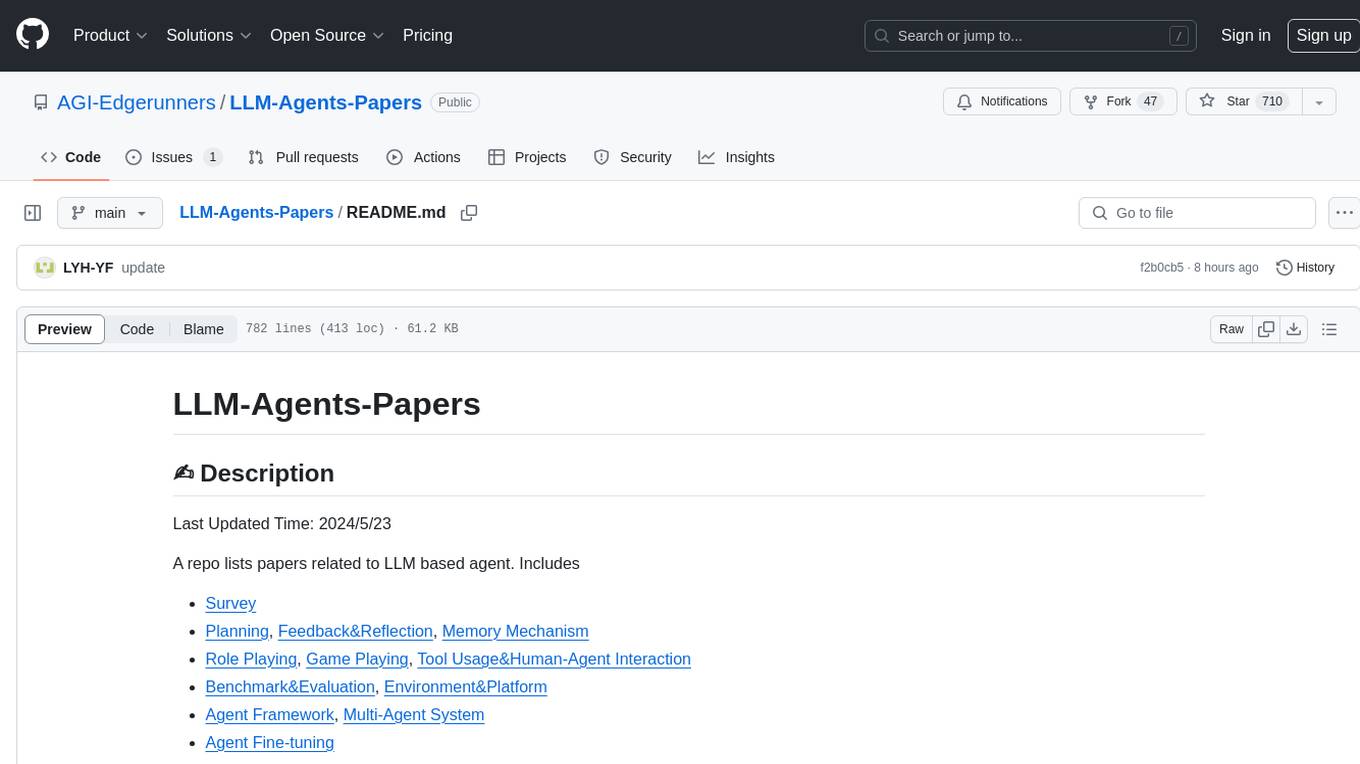

LLM-Agents-Papers

A repository that lists papers related to Large Language Model (LLM) based agents. The repository covers various topics including survey, planning, feedback & reflection, memory mechanism, role playing, game playing, tool usage & human-agent interaction, benchmark & evaluation, environment & platform, agent framework, multi-agent system, and agent fine-tuning. It provides a comprehensive collection of research papers on LLM-based agents, exploring different aspects of AI agent architectures and applications.

NotHotDog

NotHotDog is an open-source platform for testing, evaluating, and simulating AI agents. It offers a robust framework for generating test cases, running conversational scenarios, and analyzing agent performance.

Maige

Maige is open-source infrastructure for running natural-language workflows on your codebase. Users connect a repository, define rules for handling issues and pull requests, and monitor workflow execution through a dashboard. Maige uses AI to label, assign, comment, review code, and execute simple code snippets, while remaining customizable and flexible through the GitHub API.